Technical Breakdown: Generalization and Network Design

How Yann LeCun's Design Strategies Enabled Networks to Learn Beyond Memorization

Summary

Generalization: Focuses on the ability of neural networks to correctly handle unseen data, emphasizing its importance over simply memorizing training examples.

Network Constraints: Introduces methods like weight sharing and local connectivity that reduce the number of free parameters and improve generalization.

Weight Space Transformations: Explains how to reduce the number of parameters strategically without losing computational capability, improving both training speed and accuracy.

Impact on AI: Influenced modern convolutional neural networks (CNNs) and contributed significantly to practical AI applications.

Introduction

In the previous article on backpropagation, we explored Rumelhart, Hinton, and Williams’ demonstration of how multilayer neural networks could effectively learn complex representations. However, despite this powerful advancement, neural networks still faced a major challenge: generalization.

Yann LeCun’s 1989 paper Generalization and Network Design Strategies tackled this exact challenge by providing clear guidelines for improving a neural network’s ability to generalize, highlighting that performance on unseen data is the true test of learning.

What Problem Does This Paper Solve?

Despite the advances in training neural networks, early models often memorized their training data rather than learning the underlying patterns, leading to poor performance on new, unseen examples. This phenomenon, called overfitting, limited the practical effectiveness of neural networks.

LeCun addressed this by exploring how network design strategies could drastically enhance the generalization capabilities of neural networks.

LeCun offered an intuitive analogy from curve fitting:

If a model has too many parameters, it fits the training data perfectly but fails to capture the underlying trend (overfitting).

Conversely, too few parameters limit the model's ability to represent the data accurately (underfitting).

The ideal model balances its complexity (its number of free parameters) against the number of training examples available.
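To make the analogy concrete, here is a minimal Python sketch (using NumPy) that fits polynomials of degree 1, 3, and 9 to ten noisy samples of a sine curve. The target curve, the noise level, the sample counts, and the random seed are illustrative assumptions, not values from the paper. The polynomial degree plays the role of model complexity: a degree-d polynomial has d + 1 free parameters.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def true_curve(x):
    # Hypothetical "underlying trend" the model is supposed to discover.
    return np.sin(2 * np.pi * x)

# Ten noisy training samples and a dense, noise-free test grid (both assumptions).
x_train = np.linspace(0.0, 1.0, 10)
y_train = true_curve(x_train) + rng.normal(scale=0.2, size=x_train.size)
x_test = np.linspace(0.0, 1.0, 200)
y_test = true_curve(x_test)

def mse(pred, target):
    return float(np.mean((pred - target) ** 2))

results = {}
for degree in (1, 3, 9):
    # Least-squares polynomial fit; degree + 1 coefficients = free parameters.
    model = Polynomial.fit(x_train, y_train, deg=degree)
    results[degree] = (mse(model(x_train), y_train), mse(model(x_test), y_test))

for degree, (train_err, test_err) in results.items():
    print(f"degree {degree}: {degree + 1} params, "
          f"train MSE {train_err:.4f}, test MSE {test_err:.4f}")
```

Because the degree-9 polynomial has as many coefficients as there are training points, it passes through every sample and drives training error to essentially zero, while its error on the test grid is typically much larger than that of the degree-3 fit. The degree-1 line, by contrast, is too rigid to follow the curve at all.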

Key Ideas in the Paper

1. Network Constraints for Improved Generalization

LeCun introduced specific constraints in neural network design, significantly reducing overfitting:

Local Connectivity: Instead of connecting every neuron to every neuron in the previous layer, each neuron receives input only from a small local region of that layer (its receptive field), an idea inspired by the visual system.

Weight Sharing: Groups of connections are constrained to use the same weight value, so the same feature detector is applied at different locations of the input. This reduces the total number of free parameters while preserving the network's computational power, and it became foundational in convolutional neural networks (CNNs).
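The saving from these two constraints can be counted directly. The NumPy sketch below builds three versions of one hidden layer and counts their free parameters. The geometry is an assumption chosen for illustration (a 16x16 input, an 8x8 hidden map, 3x3 receptive fields spaced two pixels apart, and a single shared bias in the weight-sharing case), and the helper `receptive_fields` is hypothetical, not part of any library.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed layer geometry (illustrative, not the paper's exact networks):
IN, OUT, K, STRIDE = 16, 8, 3, 2   # 16x16 input -> 8x8 map, 3x3 fields, stride 2

def receptive_fields(image):
    """Return an (OUT*OUT, K*K) array: one flattened K x K patch per hidden unit."""
    need = (OUT - 1) * STRIDE + K                  # 17 pixels needed per axis
    padded = np.pad(image, ((0, need - IN), (0, need - IN)))  # zero-pad bottom/right
    return np.stack([
        padded[i * STRIDE:i * STRIDE + K, j * STRIDE:j * STRIDE + K].ravel()
        for i in range(OUT) for j in range(OUT)
    ])

image = rng.normal(size=(IN, IN))
patches = receptive_fields(image)

# 1) Fully connected: every hidden unit sees every pixel.
W_fc, b_fc = rng.normal(size=(OUT * OUT, IN * IN)), rng.normal(size=OUT * OUT)
h_fc = W_fc @ image.ravel() + b_fc

# 2) Locally connected: each unit sees only its K x K patch, with its own weights.
W_lc, b_lc = rng.normal(size=(OUT * OUT, K * K)), rng.normal(size=OUT * OUT)
h_lc = np.sum(W_lc * patches, axis=1) + b_lc

# 3) Shared weights: one K x K kernel (and, here, one bias) reused by every unit.
w_shared, b_shared = rng.normal(size=K * K), rng.normal()
h_shared = patches @ w_shared + b_shared

param_counts = {
    "fully connected": W_fc.size + b_fc.size,      # 64*256 + 64 = 16448
    "locally connected": W_lc.size + b_lc.size,    # 64*9 + 64 = 640
    "shared weights": w_shared.size + 1,           # 9 + 1 = 10
}
print(param_counts)

# Weight sharing is a constraint on the locally connected layer: tie every row
# of the local weight matrix to the same kernel and the outputs coincide.
h_tied = np.sum(np.tile(w_shared, (OUT * OUT, 1)) * patches, axis=1) + b_shared
assert np.allclose(h_tied, h_shared)
```

The counts drop from 16,448 to 640 to 10. The final check makes the relationship explicit: a shared-weight layer is a locally connected layer whose per-unit weights are constrained to be identical, so it computes the same kind of local feature with far fewer parameters.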

2. Weight Space Transformations (WST)

Building on ideas from the backpropagation work discussed in the previous article, LeCun explored transformations that simplify the weight space:

WST methods, especially weight sharing, drastically reduce the number of free parameters by imposing symmetry constraints: groups of connections are forced to take the same value.

This not only improved generalization but also accelerated training by reducing complexity.

This concept was crucial in enabling practical neural network training, especially in vision tasks.

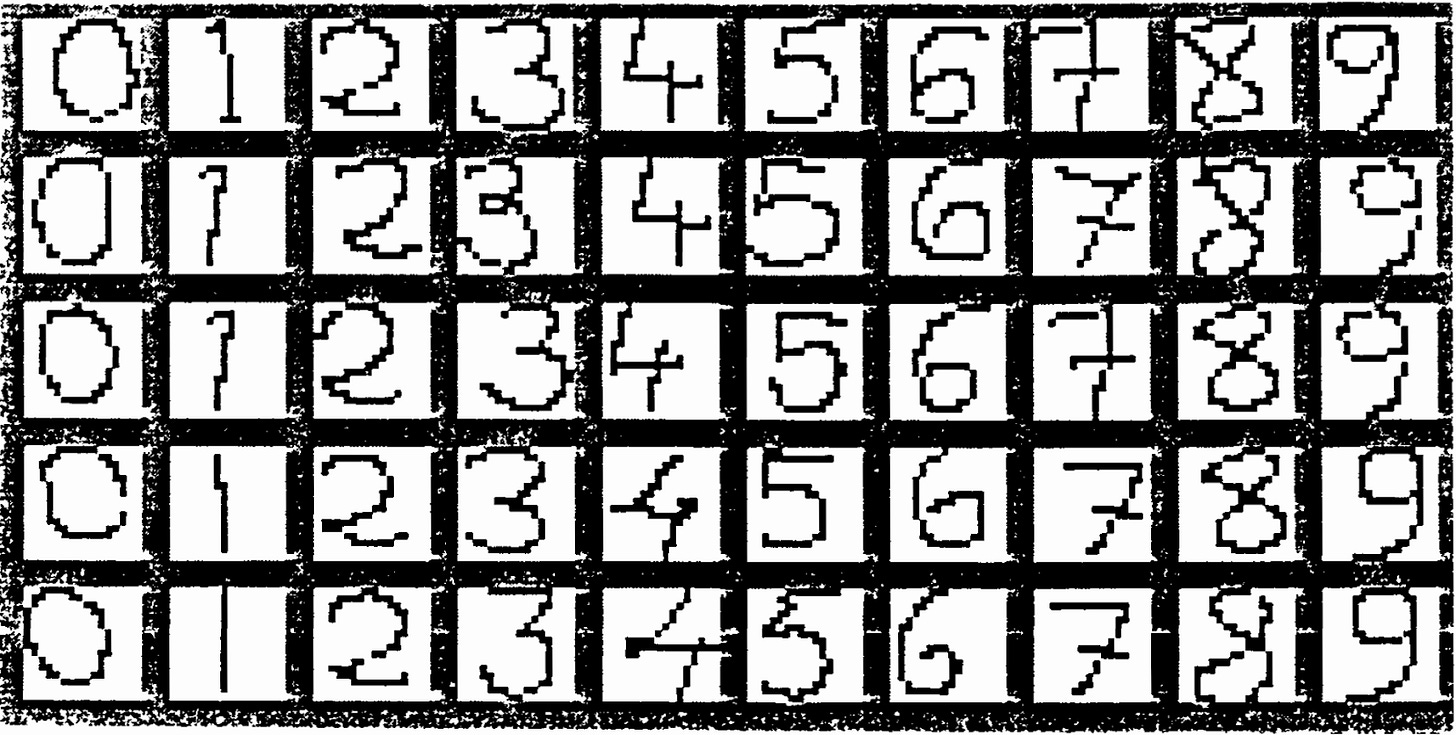
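One way to read "weight space transformation" is to write the full vector of connection weights w as a fixed linear map of a smaller vector of free parameters u, so that w = T u. The sketch below uses a 0/1 tying matrix T for a hypothetical one-dimensional layer (6 outputs, width-3 windows); the sizes, names, and random data are assumptions for illustration, and this formulation is not presented as the paper's exact notation. The chain rule then gives dE/du = T^T dE/dw: the gradient of a shared parameter is the sum of the gradients of every connection that uses it, so ordinary backpropagation needs only one extra summation step.

```python
import numpy as np

rng = np.random.default_rng(0)

N_OUT, K = 6, 3            # hypothetical: 6 output units, each with a width-3 window
N_IN = N_OUT + K - 1       # 1-D input long enough for every window (8 values)

# Tying matrix T: connection (i, m) joins output i to input i + m, and every
# connection with the same offset m uses free parameter u[m]. So w = T @ u.
T = np.zeros((N_OUT * K, K))
for i in range(N_OUT):
    for m in range(K):
        T[i * K + m, m] = 1.0

x = rng.normal(size=N_IN)
target = rng.normal(size=N_OUT)
windows = np.stack([x[i:i + K] for i in range(N_OUT)])   # shape (N_OUT, K)

def loss(w):
    """Squared error of a linear layer whose connection weights are w."""
    y = np.sum(w.reshape(N_OUT, K) * windows, axis=1)
    return 0.5 * np.sum((y - target) ** 2)

def grad_w(w):
    """dE/dw for every individual connection (ordinary backprop)."""
    y = np.sum(w.reshape(N_OUT, K) * windows, axis=1)
    return ((y - target)[:, None] * windows).ravel()

u = rng.normal(size=K)     # 3 free parameters ...
w = T @ u                  # ... expanded into 18 connection weights

# Chain rule through w = T u: dE/du = T^T dE/dw, i.e. sum the gradients of
# all connections that share a parameter.
grad_u = T.T @ grad_w(w)
assert np.allclose(grad_u, grad_w(w).reshape(N_OUT, K).sum(axis=0))

# Central finite-difference check on the free parameters.
eps = 1e-6
grad_fd = np.array([
    (loss(T @ (u + eps * e)) - loss(T @ (u - eps * e))) / (2 * eps)
    for e in np.eye(K)
])
print(grad_u, grad_fd)
```

Only 3 numbers are learned even though the layer has 18 connections, which is the kind of parameter reduction described above; the finite-difference estimate should agree with `grad_u` up to rounding error.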

3. Practical Demonstration: Digit Recognition

LeCun illustrated these ideas through a practical example: recognizing handwritten digits.

He compared several network architectures:

Single-layer fully connected networks performed poorly, quickly overfitting.

Multi-layer, locally connected networks performed better but still faced generalization challenges.

Networks with weight-sharing constraints achieved significantly better performance (up to 98.4% accuracy), clearly demonstrating the value of architectural constraints.
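A commonly cited reason shared weights help on images such as handwritten digits is that a shared-weight layer applies the same feature detector at every position, so shifting the input shifts the feature map by the same amount. The sketch below checks this on a hypothetical 10x10 input; it is a toy illustration of the property, not a reconstruction of LeCun's digit-recognition networks, and the function names are made up for the example.

```python
import numpy as np

rng = np.random.default_rng(1)

def shared_layer(image, kernel):
    """Valid 2-D cross-correlation: the same kernel is applied at every position."""
    k = kernel.shape[0]
    h, w = image.shape[0] - k + 1, image.shape[1] - k + 1
    return np.array([[np.sum(kernel * image[i:i + k, j:j + k]) for j in range(w)]
                     for i in range(h)])

def local_layer(image, kernels):
    """Same receptive fields, but each output position has its own kernel."""
    k = kernels.shape[-1]
    h, w = kernels.shape[:2]
    return np.array([[np.sum(kernels[i, j] * image[i:i + k, j:j + k]) for j in range(w)]
                     for i in range(h)])

# A hypothetical 10x10 "image" with a small stroke pattern, and a copy shifted by (dy, dx).
image = np.zeros((10, 10))
image[2:5, 2:4] = rng.normal(size=(3, 2))
dy, dx = 2, 3
shifted = np.zeros_like(image)
shifted[dy:, dx:] = image[:-dy, :-dx]

kernel = rng.normal(size=(3, 3))
kernels = rng.normal(size=(8, 8, 3, 3))   # one kernel per position of the 8x8 map

# Shared weights: is the feature map of the shifted image the shifted feature map?
a, b = shared_layer(image, kernel), shared_layer(shifted, kernel)
print(np.allclose(b[dy:, dx:], a[:-dy, :-dx]))

# Unshared (locally connected) weights: the same test.
c, d = local_layer(image, kernels), local_layer(shifted, kernels)
print(np.allclose(d[dy:, dx:], c[:-dy, :-dx]))
```

The first check holds exactly, because each output of the shifted image is computed from the same pixels with the same kernel, while the second fails for generic random weights. A locally connected layer without sharing must learn the same detector separately at every location, which is one intuition for why it needs more training examples to generalize.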

Why Is This Important?

LeCun’s work offered a practical answer to one of neural networks' biggest limitations: their tendency to memorize rather than generalize. By building explicit constraints into the architecture, it delivered:

Improved Accuracy: Networks reliably generalized better to unseen data.

Reduced Complexity: Constraints led to simpler, more efficient models that retained powerful learning capabilities.

Scalable Design Principles: Laid down fundamental principles for designing networks tailored to specific tasks, like image and speech recognition.

How This Connects to Modern AI

This paper provided foundational insights now embedded in the heart of modern AI systems:

Convolutional Neural Networks (CNNs) directly stemmed from LeCun’s constrained architectures and are central to today's image processing tasks.

Regularization Methods: Weight sharing is itself a form of regularization, and the broader idea of constraining a network to improve generalization remains central to modern regularization techniques.

Practical Applications: These principles directly inform how neural networks are designed today, influencing AI applications from medical imaging to autonomous driving.

Conclusion: Designing Networks that Truly Learn

LeCun’s 1989 paper moved neural network design from a purely theoretical exercise into practical engineering. By emphasizing the importance of generalization and introducing network design strategies, he provided a clear path to creating neural networks that truly learn, not just memorize.

The key insight of this paper was that the architecture itself can guide a network's ability to generalize. By carefully imposing constraints, LeCun showed that neural networks could effectively balance complexity and learning ability.

Today, the quest to design efficient networks continues. While LeCun’s strategies have become standard practice, new challenges in network architecture continue to push us towards uncovering the true potential of neural networks.

Whether we have fully uncovered this potential, or if the best designs are still ahead, remains an exciting and open-ended question.